Local Debugging and Testing

This document describes how to run application code in a local development environment for debugging and testing.

Overview

In AWS Lambda, whether the function is invoked by Amazon API Gateway (Web API) or by an event trigger, the data needed for processing is passed in event, the first argument of the handler function. By preparing this event payload in advance and running the handler locally, we reproduce Lambda's behavior.
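The idea can be sketched as follows. A handler is an ordinary Python function, so a local script can build the event dict by hand and call the handler directly, with no Lambda runtime involved. The handler name lambda_handler, its echo logic, and SAMPLE_EVENT are hypothetical placeholders, not the catalog's actual code.

```python
import json
from typing import Any


def lambda_handler(event: dict[str, Any], context: Any) -> dict[str, Any]:
    """Hypothetical handler: echoes the 'id' query parameter back as JSON."""
    params = event.get("queryStringParameters") or {}
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"id": params.get("id")}),
    }


# A hand-written event payload standing in for what API Gateway would send.
SAMPLE_EVENT = {"queryStringParameters": {"id": "1"}}

if __name__ == "__main__":
    # Call the handler like a normal function; this sketch does not use the
    # context object, so None is passed in its place.
    print(lambda_handler(SAMPLE_EVENT, None))
```

Because the event is plain data, switching between test cases only means editing the dict.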

When processing integrates with other AWS services such as Amazon DynamoDB or Amazon S3, the AWS credentials configured in the local environment (access keys in environment variables, or ~/.aws/credentials) are used instead of the AWS Lambda execution role.
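To check which of the two credential sources named above is in effect, a simplified sketch like the following can help. It only mirrors the fact that the AWS SDKs read the environment variables before the shared credentials file; the real boto3/botocore provider chain checks more sources (profiles, SSO, and on EC2 the instance profile role). The function describe_credential_source is hypothetical.

```python
import os
from pathlib import Path
from typing import Mapping, Optional


def describe_credential_source(env: Optional[Mapping[str, str]] = None,
                               home: Optional[str] = None) -> str:
    """Simplified check of the two local credential sources (not the full chain).

    Environment variables take precedence over the shared credentials file.
    If neither is present, the SDK falls back to further sources such as
    the EC2 instance profile, which this sketch does not inspect.
    """
    env = os.environ if env is None else env
    if env.get("AWS_ACCESS_KEY_ID") and env.get("AWS_SECRET_ACCESS_KEY"):
        return "environment variables"
    # AWS_SHARED_CREDENTIALS_FILE overrides the default ~/.aws/credentials path.
    default_home = Path.home() if home is None else Path(home)
    creds_file = Path(env.get("AWS_SHARED_CREDENTIALS_FILE")
                      or default_home / ".aws" / "credentials")
    if creds_file.is_file():
        return "shared credentials file"
    return "none of the checked sources"
```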

Examples of specifying event payloads are listed below.

- WebAPI Logic
  - Directly specify the request payload of API calls passed from API Gateway (REST API) and execute handler functions.
  - src/.local.sample_api.get_users.py
  - src/.local.sample_api.post_users.py
- Event Trigger
  - Directly specify event payloads passed from S3, SNS, SQS, etc., and execute handler functions.
  - src/.local.sample_event.import_users.py

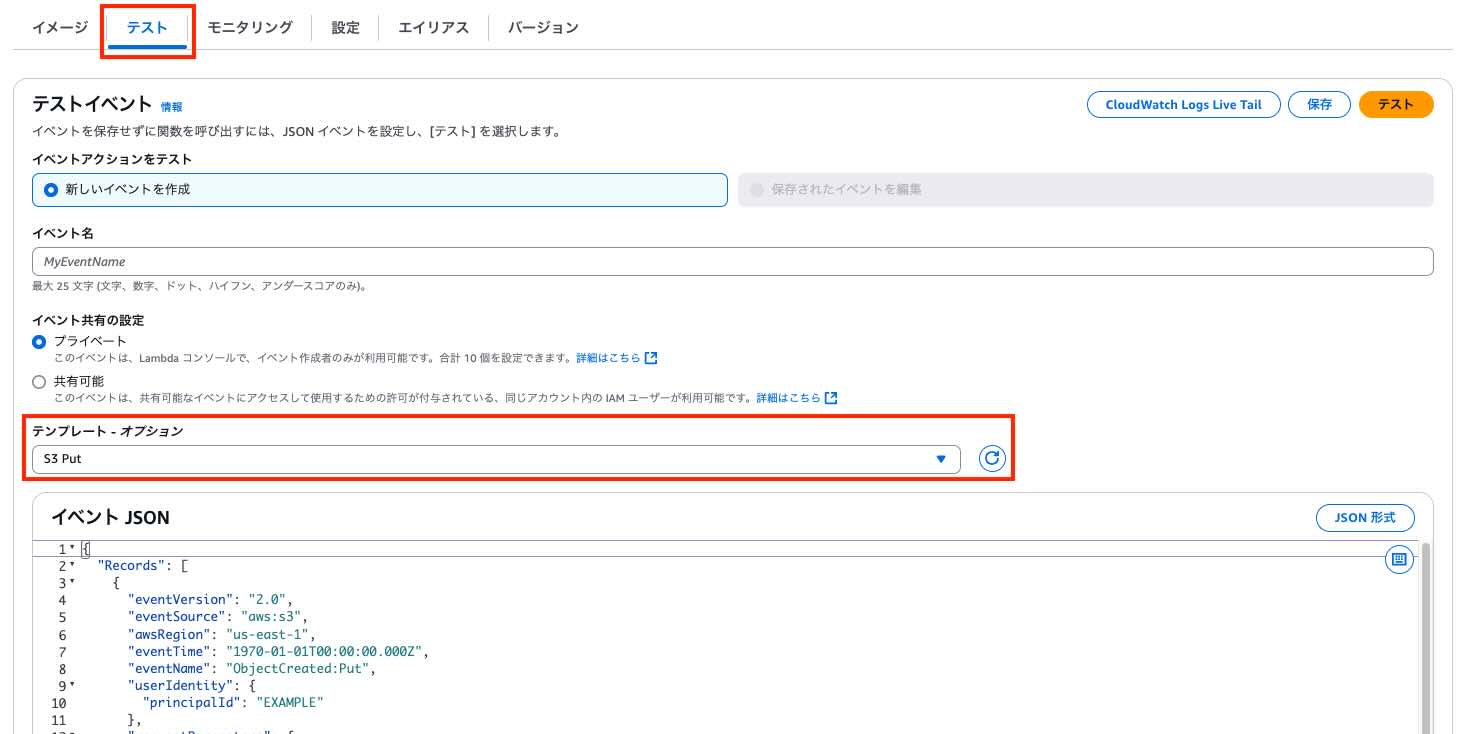

Event payload templates can be obtained from the AWS Lambda console. For S3 events, select "S3 Put", and for API Gateway (REST API), select "API Gateway AWS Proxy".

Local Execution Procedure

- "Local environment" refers to the EC2 instance environment launched with this catalog.

1. Docker Startup

Local development uses dedicated Python scripts together with PostgreSQL, MinIO (S3-compatible storage), and DynamoDB Local containers. First, start the containers defined in docker-compose.yaml.

[ec2-user ~]$ docker compose up -d
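`docker compose up -d` returns once the containers are started, not when the services inside them accept connections, so running the init scripts immediately can fail. A minimal readiness-check sketch follows. The ports are assumptions (5432 is the psql default used in step 2, 8001 is the dynamodb-admin port from step 3); an open TCP port only shows that something is listening, not that PostgreSQL is ready for queries.

```python
import socket
import time

# Assumed host ports; adjust them to the mappings in docker-compose.yaml.
SERVICES = {
    "postgres": ("localhost", 5432),
    "dynamodb-admin": ("localhost", 8001),
}


def wait_for_port(host: str, port: int, timeout: float = 30.0) -> bool:
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=1.0):
                return True
        except OSError:
            # Connection refused or timed out: retry until the deadline passes.
            if time.monotonic() >= deadline:
                return False
            time.sleep(0.5)
```

For example, `all(wait_for_port(h, p) for h, p in SERVICES.values())` could gate the init scripts in the following steps.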

2. Table Creation

Create a database and tables in the PostgreSQL started with containers. The following Python script automatically creates the database and users table in the container.

[ec2-user ~]$ poetry run python ./src/.init_db.py

The PostgreSQL command-line tool psql is also installed, so you can use it to work with the tables in the container. Here we insert three rows. When prompted for a password, enter "password".

[ec2-user ~]$ psql -h localhost -U admin -d mydb
mydb=# INSERT INTO users (name, country, age) VALUES
('Alice', 'Japan', 30),
('Bob', 'USA', 25),
('Charlie', 'Germany', 35);
mydb=# exit

Typing exit returns you to the normal terminal.

3. Creating the DynamoDB Table

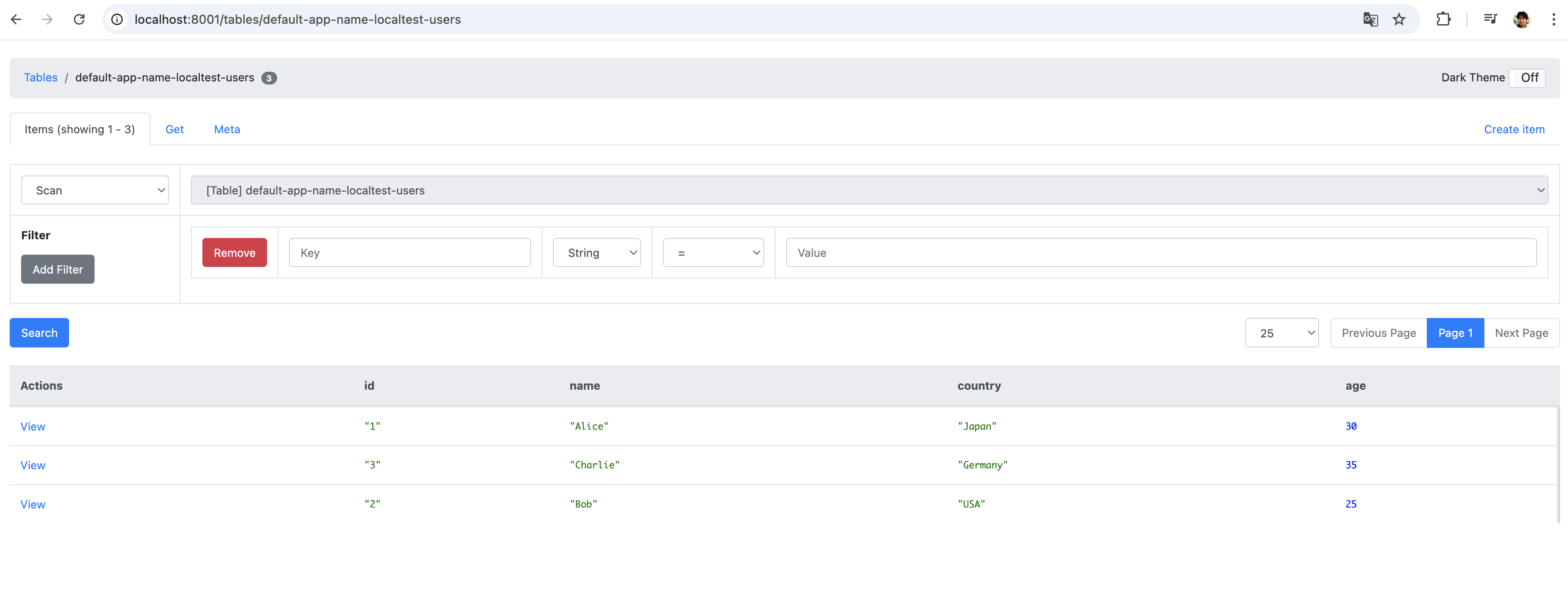

Run the following script to automatically create a DynamoDB table and sample data in the DynamoDB Local container:

[ec2-user ~]$ poetry run python src/.init_dynamodb.py

Additionally, the dynamodb-admin container (a GUI tool for DynamoDB Local) is also running, allowing you to view the tables and their contents at http://localhost:8001.
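Code that talks to DynamoDB Local typically differs from production only in the endpoint_url passed to boto3. A minimal sketch, assuming DynamoDB Local is published on localhost:8000 (its default port); check docker-compose.yaml for the real mapping. Note that unless DynamoDB Local runs with -sharedDb, it keeps a separate database per access key ID and region, so the dummy values below would need to match whatever .init_dynamodb.py uses (not shown here) to see the same tables.

```python
from typing import Any

# Assumption: DynamoDB Local listens on its default port 8000.
DYNAMODB_LOCAL_ENDPOINT = "http://localhost:8000"


def dynamodb_local_kwargs(endpoint: str = DYNAMODB_LOCAL_ENDPOINT) -> dict[str, Any]:
    """Keyword arguments for boto3.client() that target DynamoDB Local."""
    return {
        "service_name": "dynamodb",
        "endpoint_url": endpoint,
        # Placeholder values; see the -sharedDb note above.
        "region_name": "us-east-1",
        "aws_access_key_id": "dummy",
        "aws_secret_access_key": "dummy",
    }


def list_local_tables(endpoint: str = DYNAMODB_LOCAL_ENDPOINT) -> list[str]:
    """Return the table names visible to these credentials in DynamoDB Local."""
    import boto3  # imported here so the kwargs helper works without boto3

    client = boto3.client(**dynamodb_local_kwargs(endpoint))
    return client.list_tables()["TableNames"]
```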

4. Local API Execution

GET API

.local.sample_api.get_users.py is a Python script that simulates an API that retrieves user data with the GET method. It generates a mock API Gateway event using a function called generate_http_api_request_payload. To change the query string parameters sent to the API while developing, edit the following part of the file.

QUERY_PARAMS = {
    'id': '1'
}
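The implementation of generate_http_api_request_payload is not shown here, so the following is a hypothetical stand-in, build_http_api_event, to illustrate what such a helper does. It assumes the API Gateway HTTP API payload format version 2.0, which the helper's name suggests. If the handler instead expects the REST API ("API Gateway AWS Proxy") format, the field names differ, for example httpMethod instead of requestContext.http.method. Only a subset of the real fields is filled in, and the route "/users" is an assumption.

```python
import json
from typing import Any, Optional
from urllib.parse import urlencode

QUERY_PARAMS = {
    'id': '1'
}


def build_http_api_event(
    method: str,
    path: str,
    query_params: Optional[dict[str, str]] = None,
    body: Optional[dict[str, Any]] = None,
) -> dict[str, Any]:
    """Hypothetical partial API Gateway HTTP API event (payload format 2.0)."""
    event: dict[str, Any] = {
        "version": "2.0",
        "routeKey": f"{method} {path}",
        "rawPath": path,
        "rawQueryString": urlencode(query_params or {}),
        "headers": {"content-type": "application/json"},
        "requestContext": {
            "http": {"method": method, "path": path, "protocol": "HTTP/1.1"},
            "stage": "$default",
        },
        "isBase64Encoded": False,
    }
    # API Gateway leaves these keys out when there is nothing to send.
    if query_params:
        event["queryStringParameters"] = dict(query_params)
    if body is not None:
        # The body arrives as a JSON string, not as a dict.
        event["body"] = json.dumps(body)
    return event


if __name__ == "__main__":
    print(json.dumps(build_http_api_event("GET", "/users", QUERY_PARAMS), indent=2))
```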

[ec2-user ~]$ poetry run python ./src/.local.sample_api.get_users.py

API Execution Result: {"statusCode": 200, "body": "{\"statusCode\":200,\"body\":{\"name\":\"Alice\",\"country\":\"Japan\",\"age\":30}}", "isBase64Encoded": false, "headers": {"Content-Type": "application/json"}, "cookies": []}

Running the script returns the API response shown above.
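In the output above, the outer response's body is a JSON string that itself contains another statusCode/body pair, so reaching the user data takes two decoding steps. A minimal sketch, using the printed line verbatim; unwrap_response is a hypothetical helper, not part of the sample scripts.

```python
import json
from typing import Any

# The "API Execution Result" line from the GET example, copied verbatim.
RAW_OUTPUT = r'{"statusCode": 200, "body": "{\"statusCode\":200,\"body\":{\"name\":\"Alice\",\"country\":\"Japan\",\"age\":30}}", "isBase64Encoded": false, "headers": {"Content-Type": "application/json"}, "cookies": []}'


def unwrap_response(raw: str) -> dict[str, Any]:
    """Decode the outer Lambda response, then the JSON string in its 'body'."""
    outer = json.loads(raw)
    return json.loads(outer["body"])


if __name__ == "__main__":
    inner = unwrap_response(RAW_OUTPUT)
    print(inner["body"]["name"], inner["body"]["age"])
```

When asserting on responses in tests, comparing the decoded dict is more robust than comparing the raw string, which depends on key order and spacing.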

POST API

.local.sample_api.post_users.py simulates an API that creates users with the POST method. It likewise generates a mock API Gateway event with generate_http_api_request_payload. This time, edit the following BODY parameters to change the request body.

BODY = {
    "name": "Shigeo",
    "country": "Japan",
    "age": 30
}
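On the handler side, the body of an API Gateway proxy event is a string (base64-encoded when isBase64Encoded is true), so it has to be decoded before use. A minimal sketch; parse_json_body is a hypothetical helper, and the sample scripts may handle this differently (for example through a framework).

```python
import base64
import json
from typing import Any

BODY = {"name": "Shigeo", "country": "Japan", "age": 30}


def parse_json_body(event: dict[str, Any]) -> dict[str, Any]:
    """Return the request body of an API Gateway proxy event as a dict."""
    raw = event.get("body") or "{}"
    if event.get("isBase64Encoded"):
        raw = base64.b64decode(raw).decode("utf-8")
    return json.loads(raw)


# In a mock event, BODY must be serialized first, because API Gateway
# delivers the body as a string.
event = {"body": json.dumps(BODY), "isBase64Encoded": False}
```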

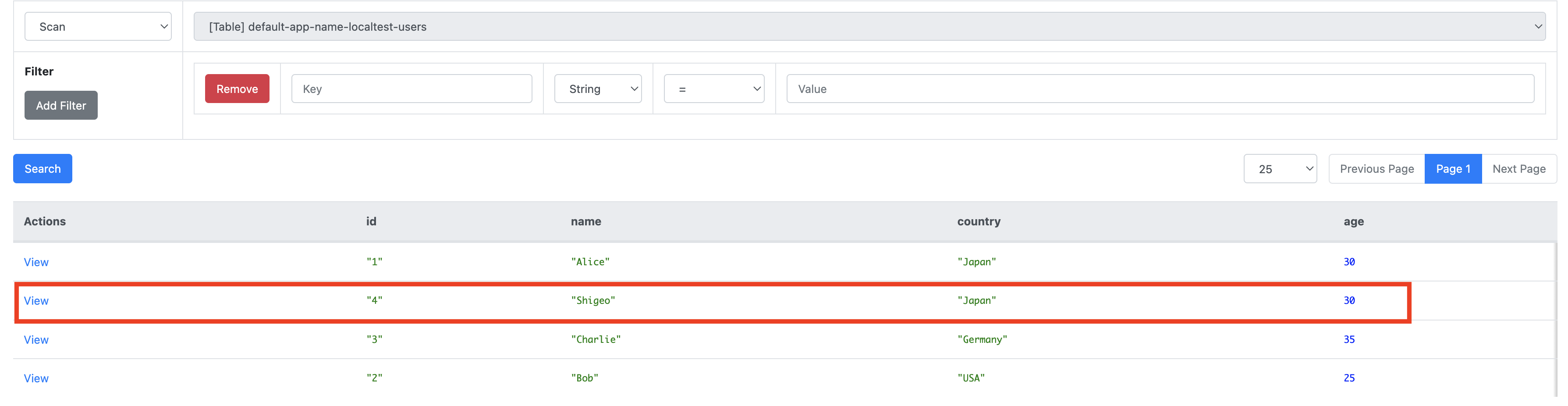

Run the script and confirm that a record with the values set in BODY has been created.

[ec2-user ~]$ poetry run python ./src/.local.sample_api.post_users.py

API Execution Result: {"statusCode": 200, "body": "{\"statusCode\":200,\"body\":{\"message\":\"The data created successfully.\"}}", "isBase64Encoded": false, "headers": {"Content-Type": "application/json"}, "cookies": []}

mydb=# SELECT * FROM users WHERE id=4;
 id |  name  | country | age
----+--------+---------+-----
  4 | Shigeo | Japan   |  30
(1 row)

The data has also been registered in DynamoDB.

5. Local Execution of S3 Events

For S3 events, we test with MinIO, an S3-compatible storage service. Running the following script creates a bucket named test-bucket on MinIO and uploads sample_users.csv to it.

[ec2-user ~]$ poetry run python ./src/.init_minio.py
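To confirm that the upload worked, an S3 client can be pointed at MinIO through endpoint_url. A minimal sketch, assuming MinIO's S3 API is published on localhost:9000 (MinIO's default API port); the access and secret keys must be the ones configured in docker-compose.yaml and are left as parameters here. The helper names are hypothetical.

```python
from typing import Any

# Assumption: MinIO's S3 API is reachable on its default port 9000.
MINIO_ENDPOINT = "http://localhost:9000"


def minio_s3_kwargs(access_key: str, secret_key: str,
                    endpoint: str = MINIO_ENDPOINT) -> dict[str, Any]:
    """Keyword arguments for boto3.client() that target MinIO instead of AWS."""
    return {
        "service_name": "s3",
        "endpoint_url": endpoint,
        "aws_access_key_id": access_key,
        "aws_secret_access_key": secret_key,
        "region_name": "us-east-1",
    }


def list_object_keys(bucket: str, access_key: str, secret_key: str) -> list[str]:
    """List object keys in a MinIO bucket (first page only, up to 1,000 keys)."""
    import boto3  # imported here so the kwargs helper works without boto3
    from botocore.config import Config

    s3 = boto3.client(
        **minio_s3_kwargs(access_key, secret_key),
        # Path-style URLs (http://host:9000/bucket/key) avoid relying on
        # bucket-name subdomains of localhost.
        config=Config(s3={"addressing_style": "path"}),
    )
    response = s3.list_objects_v2(Bucket=bucket)
    return [obj["Key"] for obj in response.get("Contents", [])]
```

If .init_minio.py succeeded, list_object_keys("test-bucket", ...) should include sample_users.csv.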

MinIO cannot trigger Lambda directly. Instead, generate_s3_request_payload in .local.sample_event.import_users.py generates the event that Lambda would receive from the S3 trigger, which enables local execution. When you run the script, you can see that the contents of sample_users.csv have been inserted into the DB.
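The structure of such an event can be sketched as follows. build_s3_put_event is a hypothetical stand-in for generate_s3_request_payload and fills in only a minimal subset of a real S3 ObjectCreated:Put notification (real ones also carry eventTime, userIdentity, eTag, and more). One detail worth reproducing is that S3 URL-encodes object keys in notifications (a space becomes "+"), so the handler should decode the key before reading the object.

```python
from typing import Any
from urllib.parse import quote_plus, unquote_plus


def build_s3_put_event(bucket: str, key: str, size: int = 0) -> dict[str, Any]:
    """Hypothetical minimal S3 ObjectCreated:Put notification with one record."""
    return {
        "Records": [
            {
                "eventVersion": "2.1",
                "eventSource": "aws:s3",
                "awsRegion": "us-east-1",
                "eventName": "ObjectCreated:Put",
                "s3": {
                    "bucket": {"name": bucket, "arn": f"arn:aws:s3:::{bucket}"},
                    # Keys are URL-encoded in notifications; "/" stays as-is.
                    "object": {"key": quote_plus(key, safe="/"), "size": size},
                },
            }
        ]
    }


def extract_objects(event: dict[str, Any]) -> list[tuple[str, str]]:
    """Return (bucket, decoded key) pairs from an S3 event."""
    return [
        (r["s3"]["bucket"]["name"], unquote_plus(r["s3"]["object"]["key"]))
        for r in event.get("Records", [])
    ]
```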

[ec2-user ~]$ poetry run python ./src/.local.sample_event.import_users.py

mydb=# SELECT * FROM users;
 id |  name   | country | age
----+---------+---------+-----
  1 | Alice   | Japan   |  30
  2 | Bob     | USA     |  25
  3 | Charlie | Germany |  35
  4 | Shigeo  | Japan   |  30
  5 | Taro    | Japan   |  30
  6 | Alice   | USA     |  28
  7 | Bob     | UK      |  35
  8 | Maria   | Germany |  25
  9 | Li      | China   |  32
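Rows 5 to 9 above come from sample_users.csv. The import step can be sketched as parsing the CSV into tuples and passing them to a parameterized INSERT. This is a hypothetical sketch: it assumes the CSV has a header row with the columns name,country,age, which may not match the real file. The id column is left out so that PostgreSQL assigns it, as the sequential ids above suggest.

```python
import csv
import io

# Parameterized statement with psycopg2-style %s placeholders.
INSERT_SQL = "INSERT INTO users (name, country, age) VALUES (%s, %s, %s)"


def parse_users_csv(text: str) -> list[tuple[str, str, int]]:
    """Turn CSV text into (name, country, age) tuples ready for INSERT_SQL.

    Assumes a header row naming the columns name, country, and age.
    """
    reader = csv.DictReader(io.StringIO(text))
    return [(row["name"], row["country"], int(row["age"])) for row in reader]
```

With a DB-API cursor, the rows can then be written with cursor.executemany(INSERT_SQL, rows). When reading the file from S3 or MinIO with boto3, the text would come from s3.get_object(Bucket=..., Key=...)["Body"].read().decode("utf-8").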

6. Unit Testing

Sample unit tests are also provided. They use pytest, and the test code is placed in the tests directory. Like the local scripts, the unit tests use the PostgreSQL and MinIO containers, and cover the necessary test cases, such as validation patterns and normal patterns.

Tests can be run with the following command.

[ec2-user ~]$ poetry run pytest
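The split between validation patterns and normal patterns can be illustrated on a small scale. In this sketch validate_user and its rules are hypothetical, not the catalog's actual validation, and unlike the provided tests it needs no containers. pytest collects functions named test_* from files named test_*.py (for example under tests/).

```python
from typing import Any


def validate_user(body: dict[str, Any]) -> list[str]:
    """Hypothetical validator: return error messages (empty list if valid)."""
    errors = []
    for field in ("name", "country"):
        if not isinstance(body.get(field), str) or not body[field]:
            errors.append(f"{field} must be a non-empty string")
    age = body.get("age")
    # bool is a subclass of int in Python, so reject it explicitly.
    if not isinstance(age, int) or isinstance(age, bool) or age < 0:
        errors.append("age must be a non-negative integer")
    return errors


# Normal pattern: a well-formed body produces no errors.
def test_valid_user_has_no_errors():
    assert validate_user({"name": "Shigeo", "country": "Japan", "age": 30}) == []


# Validation patterns: each malformed input yields the expected message.
def test_missing_name_is_rejected():
    assert "name must be a non-empty string" in validate_user({"country": "Japan", "age": 30})


def test_negative_age_is_rejected():
    errors = validate_user({"name": "A", "country": "B", "age": -1})
    assert "age must be a non-negative integer" in errors
```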

Other Testing Methods

Setting Up an Emulated Environment Locally

For WebAPI logic, you can use SAM Local (sam local start-api) to run an API server on localhost that emulates Amazon API Gateway.
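Once sam local start-api is running, the API can be called over real HTTP instead of through a hand-built event. A minimal sketch using only the standard library; it relies on the SAM CLI default address http://127.0.0.1:3000, and the route "/users" is an assumption that should match the path in your SAM template.

```python
import json
import urllib.request
from urllib.parse import urlencode

# Default address of `sam local start-api` (change it if you pass --port).
SAM_LOCAL_BASE = "http://127.0.0.1:3000"


def build_url(path: str, query: dict[str, str], base: str = SAM_LOCAL_BASE) -> str:
    """Join the base address, path, and URL-encoded query string."""
    qs = urlencode(query)
    return f"{base}{path}?{qs}" if qs else f"{base}{path}"


def get_json(url: str, timeout: float = 30.0):
    """GET the URL and decode the JSON response body."""
    # The first request can be slow while SAM starts the function's container.
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For example, get_json(build_url("/users", {"id": "1"})) would send the same query as QUERY_PARAMS in the GET example.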

- SAM Local (SAM CLI)

For event triggers, the following tools can emulate AWS services in the local environment for testing and similar purposes.

- Amazon S3
  - MinIO (* the OSS version is available for use)
- Amazon DynamoDB
  - DynamoDB Local (* official tool distributed by AWS)